On paper, migrating your application to the cloud is easy. The harsh reality is that the only way to know for sure how it will perform is to test it. Benchmarking on-premises is hard enough, but benchmarking in the cloud can be even harder because of the restrictions on what PaaS environments expose. WorkloadTools is a collection of open-source tools for capturing, analyzing, and replaying workloads, both on-premises and in the cloud.

This blog gives you an overview of benchmarking concepts for SQL Server and Azure SQL with WorkloadTools. It covers what a benchmark is, the steps in the benchmarking process, open-source benchmarking tools, workload analysis, benchmarking SQL Server, and benchmarking Azure SQL. If you want hands-on experience benchmarking SQL Server and Azure SQL with WorkloadTools, consider taking an online SQL Server DBA training course.

What is a benchmark?

Benchmarking is a method of evaluating the quality and performance of your company’s products, services, and processes. The measurements you collect aren’t very useful on their own; they need to be compared against a benchmark, that is, a reference point.

The aim of benchmarking is to use the information gathered during the process to find areas where you can improve by:

- Identifying how and where other firms have been able to reach higher standards of productivity than your own.

- Examining the procedures and methods of your competitors and comparing them to your own.

- Using the data from your evaluations and comparisons to make improvements that will enhance the results, goods, and services of your business.

Cost per unit, time to manufacture each unit, quality of each unit, and customer satisfaction are all common areas to focus on in a benchmarking analysis. Comparing your performance metrics in these areas with those of others helps you figure out the best ways to improve your operations.

Benchmarking process

Benchmarking follows a clear, five-step process:

- Select a product, a service, or an internal department to benchmark.

- Identify the best-in-class companies to measure against: the companies with which you can compare your business.

- Obtain data on their internal results, also known as metrics.

- Compare the data from both organizations to find performance differences in your business.

- Adopt the procedures and practices used by the best-in-class companies.
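Steps three and four come down to lining up your metrics against a reference point and ranking the gaps. The Python sketch below does that under a few assumptions: the `Metric` class, the metric names, and all figures are hypothetical, and each metric is assumed to know whether lower or higher values are better.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    ours: float
    best_in_class: float
    lower_is_better: bool  # e.g. cost per unit: lower is better

    def gap_pct(self) -> float:
        """Percentage by which we trail (positive) or lead (negative) the benchmark."""
        if self.lower_is_better:
            return (self.ours - self.best_in_class) / self.best_in_class * 100
        return (self.best_in_class - self.ours) / self.best_in_class * 100


def rank_gaps(metrics: list[Metric]) -> list[tuple[str, float]]:
    """Return (name, gap %) pairs, largest performance gap first."""
    gaps = [(m.name, round(m.gap_pct(), 1)) for m in metrics]
    return sorted(gaps, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    metrics = [
        Metric("cost per unit ($)", ours=12.0, best_in_class=10.0, lower_is_better=True),
        Metric("minutes per unit", ours=45.0, best_in_class=30.0, lower_is_better=True),
        Metric("customer satisfaction (%)", ours=88.0, best_in_class=92.0, lower_is_better=False),
    ]
    for name, gap in rank_gaps(metrics):
        print(f"{name}: {gap:+.1f}% vs. best in class")
```

Sorting by gap size is one simple way to decide which improvement to tackle first; in practice you would also weigh how costly each gap is to close.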

Benchmarking will show you the improvements that will have the greatest impact, but it will be up to you to put them into action.

Open-source tools for benchmarking

Here are a few free benchmarking tools that can be used to assess a wide variety of hardware and scenarios.

1. Indigo Benchmark: for content creation and rendering

This benchmark is highly recommended, especially now that competition in the PC and desktop market between AMD and Intel, and between AMD and Nvidia, is at an all-time high. For workloads such as video rendering and content creation, it puts both your CPU and your GPU to the test.

It is at the top of the list because it is cross-platform: it runs on Mac OS X, Windows, and, of course, Linux. Besides letting you compare different hardware choices, its cross-platform design also helps you choose the best operating system for your workload.

2. Phoronix Test Suite

Phoronix offers a more comprehensive range of benchmarks covering almost every part of the system. It is not only free to use but also fully open source. Its extensible architecture can accommodate any number of tests you choose to run on your machine. For sysadmins and hardware enthusiasts alike, it is powerful, scalable, and useful.

If you’re new to benchmarking, the official Phoronix website also includes in-depth articles on the procedures. I would personally recommend their recent post on the effect of the Spectre and Meltdown mitigations on system performance.

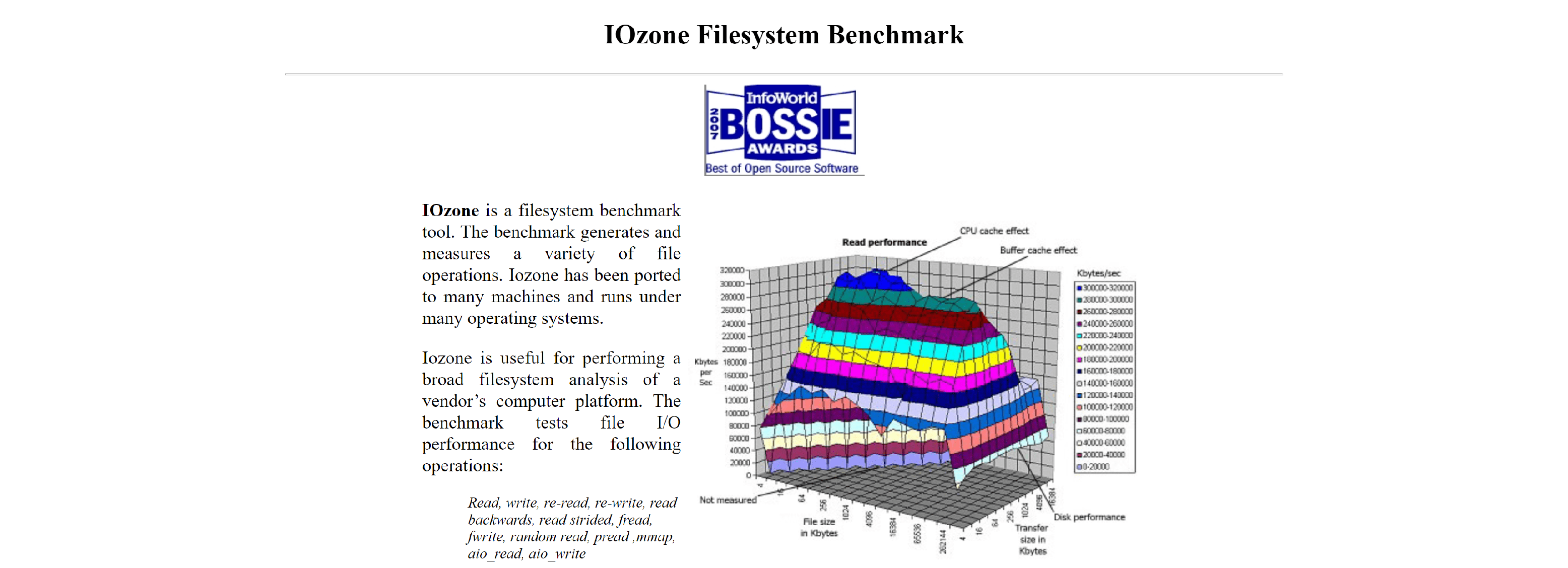

3. IOzone

Your SSDs matter, even if they aren’t the first thing you think about when building a PC or a server. Faster SSDs make for more responsive systems, and the reason is simple: modern CPUs and memory are fast enough that a program or its data is processed quickly once it reaches them. Getting it there is the slow part.

Secondary storage, such as SSDs, is therefore a significant bottleneck: the longer it takes for data to reach your processor, the slower your experience. IOzone lets you take a close look at how your storage is performing. When selecting the best SSD for your needs, consider sequential reads, sequential writes, and random IOPS.

Video streaming workloads benefit from higher sequential read speeds, while databases benefit from higher random IOPS. As a result, benchmarking storage is never as simple as copying files to a disk.
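To make the sequential-versus-random distinction concrete, here is a toy Python sketch of the kind of measurement IOzone performs far more rigorously. It is an illustration, not a replacement: it writes a scratch file and reads it back in 4 KiB blocks, first in order and then in shuffled order. The freshly written file will usually be served from the operating system’s page cache, so the numbers mostly reflect memory and system-call overhead rather than the SSD itself; dedicated tools take steps to avoid that, and this sketch does not.

```python
import os
import random
import tempfile
import time

BLOCK_SIZE = 4096  # 4 KiB reads, a common size for random-I/O tests


def read_blocks(path: str, offsets: list[int]) -> tuple[float, float]:
    """Read BLOCK_SIZE bytes at each offset; return (MiB/s, IOPS)."""
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:  # no Python-level buffering
        for off in offsets:
            f.seek(off)
            f.read(BLOCK_SIZE)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero timer delta
    mib_per_s = len(offsets) * BLOCK_SIZE / (1024 * 1024) / elapsed
    iops = len(offsets) / elapsed
    return mib_per_s, iops


def sequential_vs_random(file_size: int = 16 * 1024 * 1024, seed: int = 0) -> dict[str, tuple[float, float]]:
    """Write a scratch file, then read it back in sequential and shuffled block order."""
    sequential = list(range(0, file_size, BLOCK_SIZE))
    shuffled = sequential[:]
    random.Random(seed).shuffle(shuffled)
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "scratch.bin")
        with open(path, "wb") as f:
            f.write(os.urandom(file_size))
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the data to the device
        return {
            "sequential": read_blocks(path, sequential),
            "random": read_blocks(path, shuffled),
        }


if __name__ == "__main__":
    for pattern, (mib_s, iops) in sequential_vs_random().items():
        print(f"{pattern:>10}: {mib_s:8.1f} MiB/s  {iops:10.0f} IOPS")
```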

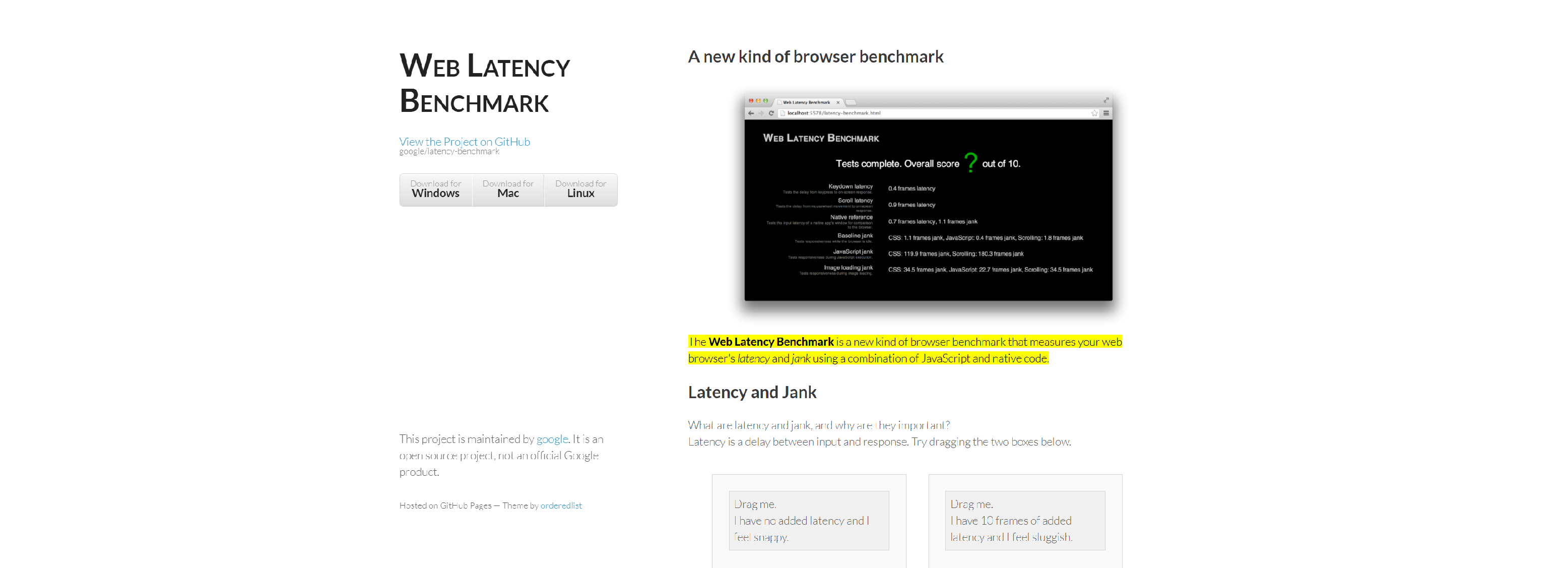

4. Web Latency Benchmark

So far we’ve covered compute and storage, but we haven’t discussed networking. Although there are many tools network engineers can use to benchmark and monitor their 10G interfaces, I’d like to focus on a different layer of networking.

Google’s Web Latency Benchmark measures the responsiveness of your web browser. This cross-platform benchmark is very helpful for measuring your browser’s real-world performance. It measures things like the delay between a keystroke and the on-screen response, scroll latency and jank, and a few other metrics.
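Jank shows up in frame timings as intervals that overrun the display’s frame budget. The Python sketch below does not reproduce the Web Latency Benchmark’s own methodology. Given a list of frame timestamps captured by whatever means you like, it counts frames that took longer than an assumed 1.5× the 60 Hz budget. Both `JANK_FACTOR` and the sample trace are hypothetical.

```python
from statistics import mean

FRAME_BUDGET_MS = 1000 / 60  # ~16.7 ms per frame on a 60 Hz display
JANK_FACTOR = 1.5            # assumption: a frame is janky if it takes >1.5x the budget


def jank_report(frame_timestamps_ms: list[float]) -> dict[str, float]:
    """Summarize frame-to-frame intervals from a list of frame timestamps (ms)."""
    if len(frame_timestamps_ms) < 2:
        raise ValueError("need at least two frame timestamps")
    intervals = [b - a for a, b in zip(frame_timestamps_ms, frame_timestamps_ms[1:])]
    janky = [d for d in intervals if d > FRAME_BUDGET_MS * JANK_FACTOR]
    return {
        "frames": len(intervals),
        "janky_frames": len(janky),
        "mean_interval_ms": mean(intervals),
        "worst_interval_ms": max(intervals),
    }


if __name__ == "__main__":
    # Hypothetical scroll trace: smooth frames with one long stall.
    trace = [0.0, 16.7, 33.4, 50.1, 100.2, 116.9]
    print(jank_report(trace))
```

Looking at the worst interval alongside the mean matters: a browser can have a good average frame time and still feel sluggish because of occasional long stalls.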

We spend a lot of time in our browsers, so if the performance of Firefox and Chrome differs even slightly, it’s worth taking the time to benchmark them and choose the better one.

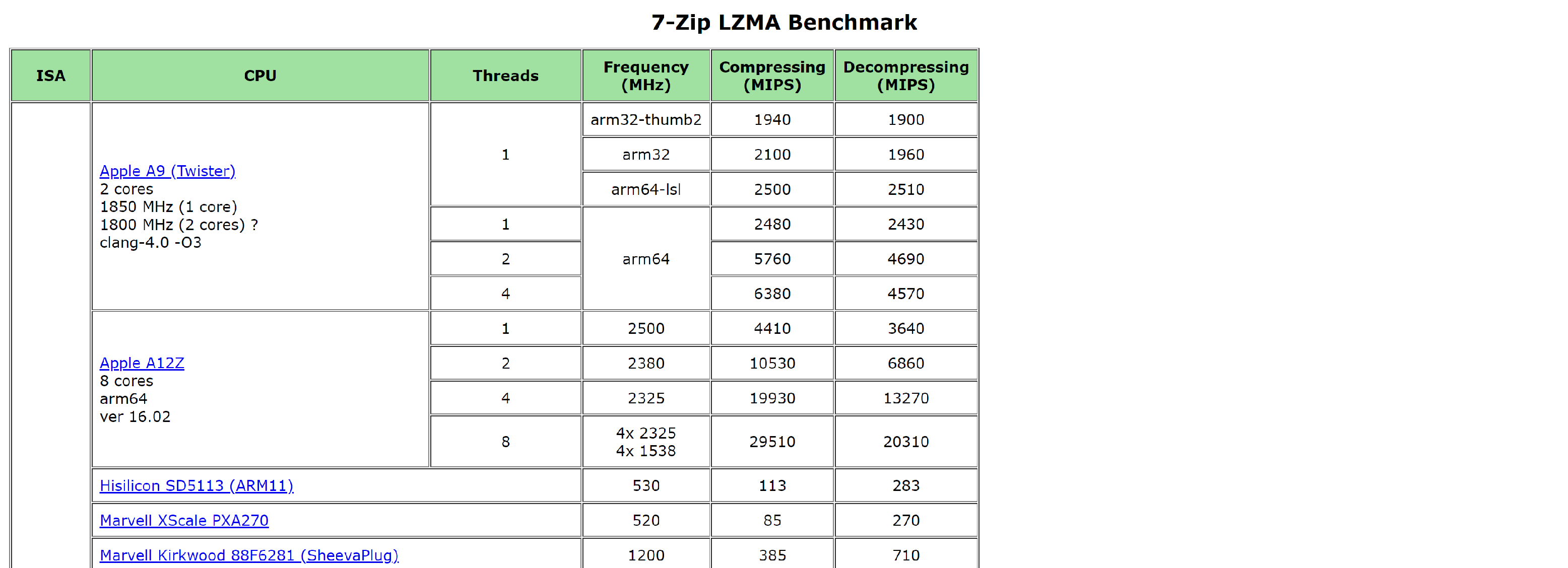

5. 7-zip

Yes, the 7-Zip archiver includes a built-in benchmark. If your workload involves a lot of compression and decompression, this is a benchmark you should care about.

7-Zip is also relevant to more demanding tasks, such as brute-force and dictionary attacks on password-protected archives. If you want to see the gap between your CPU and GPU on these kinds of workloads (which parallelize easily), 7-Zip has a lot to tell you.
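For a rough feel of what 7-Zip’s benchmark reports, here is a Python sketch built on the standard-library `lzma` module, which implements LZMA, the algorithm behind 7-Zip’s native format. It is only an analogue: 7-Zip’s own benchmark is multithreaded and uses its own settings, while this single-threaded sketch uses `lzma.compress` with a chosen preset and a hypothetical mixed sample.

```python
import lzma
import os
import time


def compression_benchmark(data: bytes, preset: int = 6) -> dict[str, float]:
    """Time LZMA compression and decompression of `data`; return MB/s and ratio."""
    start = time.perf_counter()
    compressed = lzma.compress(data, preset=preset)
    compress_s = max(time.perf_counter() - start, 1e-9)

    start = time.perf_counter()
    restored = lzma.decompress(compressed)
    decompress_s = max(time.perf_counter() - start, 1e-9)

    if restored != data:  # a benchmark is meaningless if the round trip fails
        raise RuntimeError("round trip failed")
    mb = len(data) / 1_000_000
    return {
        "compress_mb_s": mb / compress_s,
        "decompress_mb_s": mb / decompress_s,
        "ratio": len(data) / len(compressed),
    }


if __name__ == "__main__":
    # Hypothetical sample: half repetitive text, half random bytes,
    # so the ratio is neither trivial nor close to 1.0.
    sample = b"benchmark " * 100_000 + os.urandom(1_000_000)
    for key, value in compression_benchmark(sample).items():
        print(f"{key}: {value:.2f}")
```

As with 7-Zip’s own results, decompression is typically much faster than compression, so report the two separately.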

Performing Workload Analysis

Workload Analyzer is a Vertica utility that analyzes system information stored in Vertica system tables. It offers query tuning advice, as well as tips on how to tune database objects that are performing poorly.

Workload Analyzer monitors query execution, workload history, resources, and configuration settings to identify the root causes of poor query performance. It then generates a set of tuning recommendations based on statistics, system and data collector events, and database/table/projection design. You can use these recommendations to quickly and easily improve database performance.

There are two ways to use Workload Analyzer:

- Call the Vertica function ANALYZE_WORKLOAD.

- Use the Management Console interface.
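If you take the first route from a script, a minimal Python sketch could look like this. It assumes any DB-API 2.0 cursor connected to Vertica. An empty scope is assumed to request recommendations for all objects, and the result columns are read from `cursor.description` rather than hard-coded, so check your Vertica version’s documentation for what `ANALYZE_WORKLOAD` actually returns.

```python
def analyze_workload(cursor, scope: str = "") -> list[dict]:
    """Run Vertica's ANALYZE_WORKLOAD and return each recommendation as a dict.

    `cursor` is any DB-API 2.0 cursor connected to Vertica (assumption).
    `scope` is assumed to be '' for all objects, or a schema/table name.
    """
    # Double any single quotes so the scope is safe inside a SQL string literal.
    literal = scope.replace("'", "''")
    cursor.execute(f"SELECT ANALYZE_WORKLOAD('{literal}');")
    # Build dicts from whatever columns the server returns.
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]
```

With the `vertica-python` driver, for example, you would pass it a cursor obtained from a connection opened with `vertica_python.connect(...)` and then review each recommendation before applying it.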

Benchmarking of SQL Server

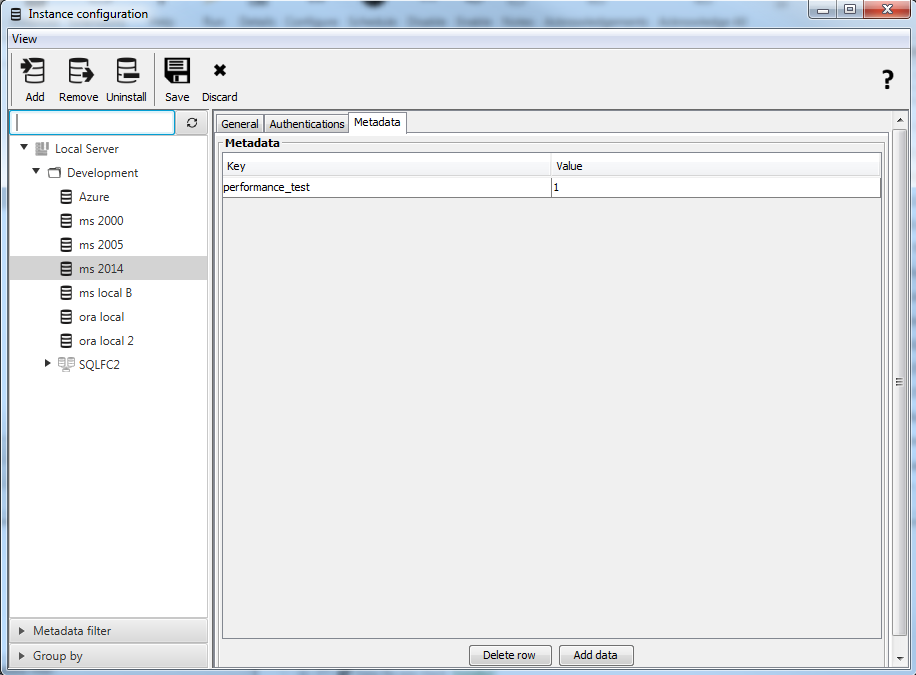

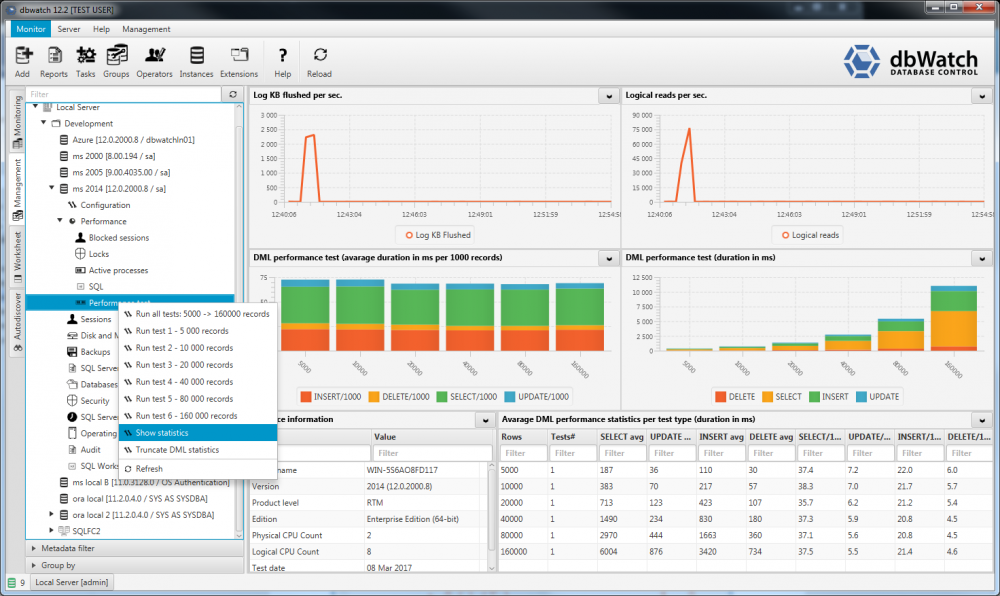

dbWatch provides a set of tests for measuring the performance of SQL Server instances. This benchmark tests the fundamental properties of a database system by running a sequence of DML statements.

How is SQL Server performance measured?

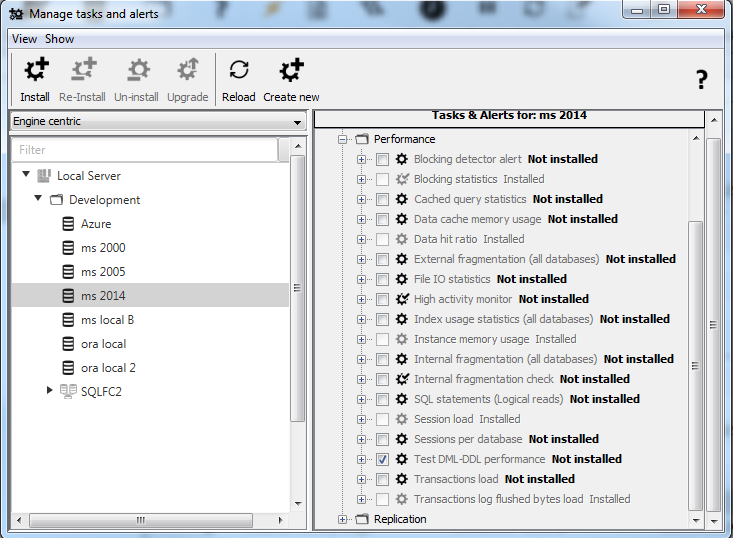

The “Test DML-DDL performance” tasks must be installed on the MS SQL Server instance before the performance test can be run.

Next, add the metadata “performance test” with the value “1” to the instance. Right-click the instance and choose “Configure” from the context menu, then click the “Metadata” tab to add the metadata.

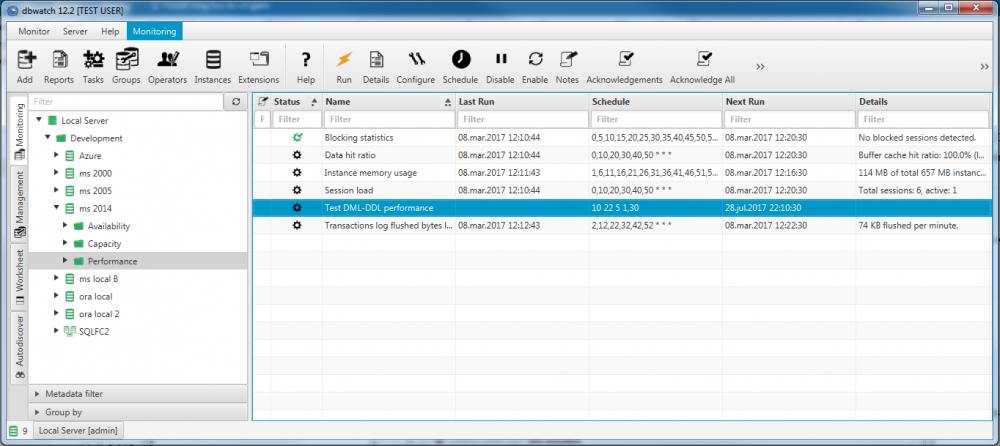

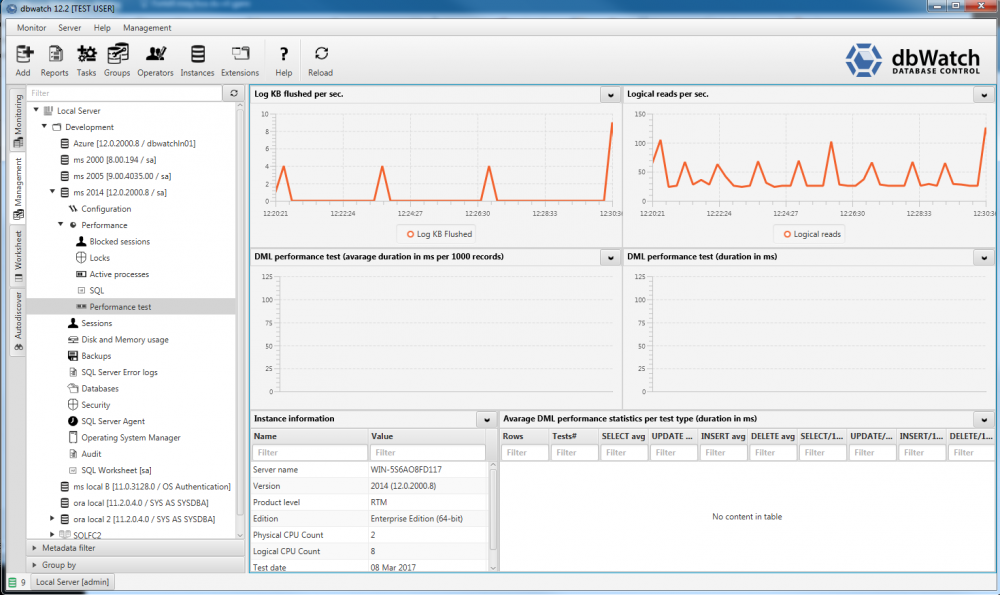

After adding the “performance test” metadata, go to the “Management” tab in the dbWatch Monitor GUI. Select the instance, and then click “Performance test” under “Performance”. You are now ready to run a test.

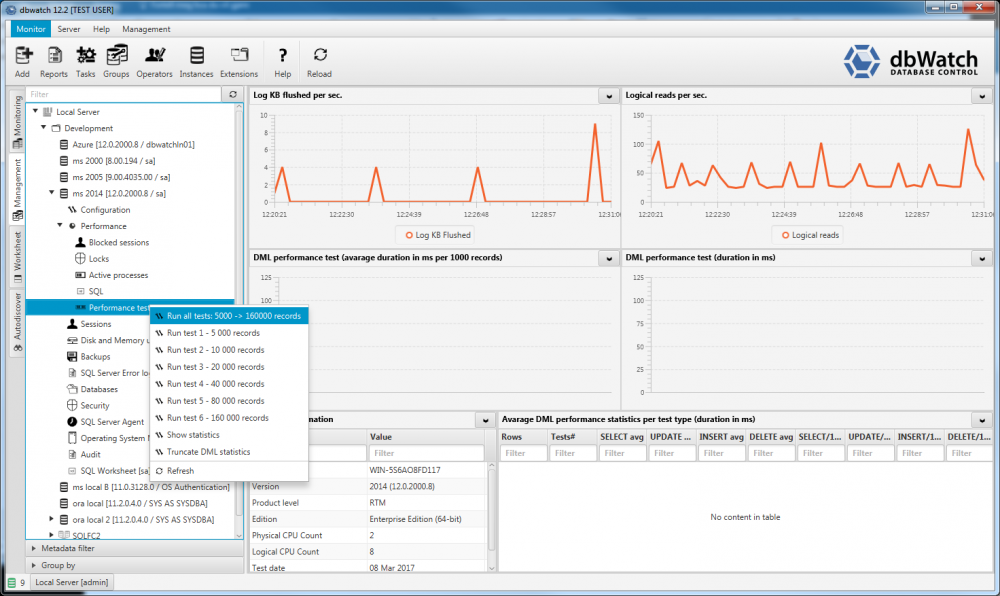

Select “Run all tests: 5000 – 160000 records” to run the whole test series.

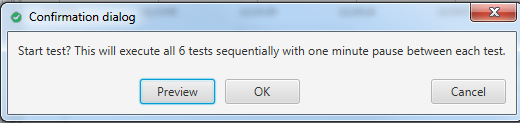

A confirmation box will appear, telling you that six tests will run in sequence, with one minute allotted to each test.

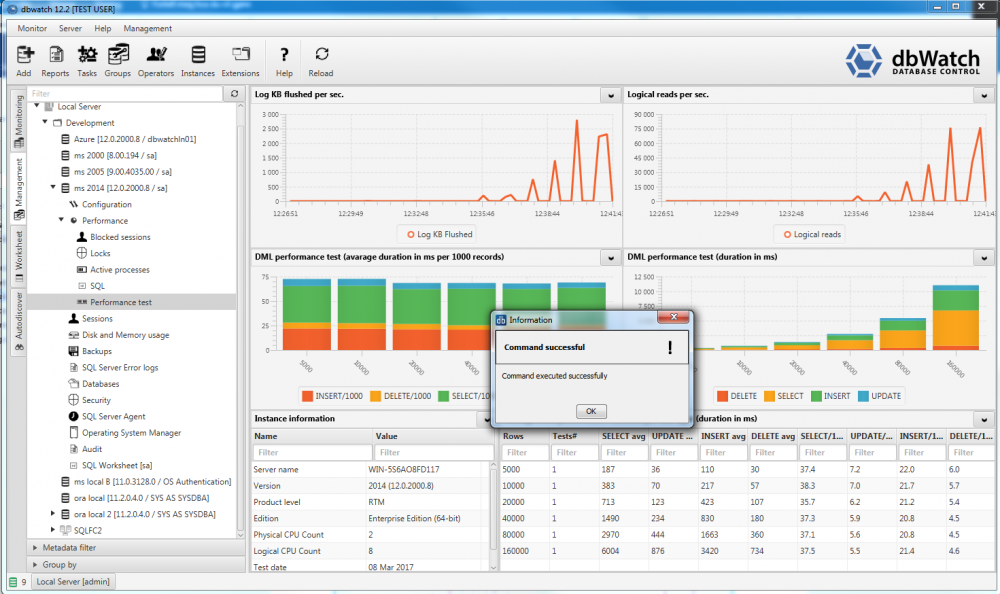

After about six minutes, the performance statistics will be available.

The upper graphs display values plotted every 15 seconds over a 15-minute window. This series contains six test runs of 5K, 10K, 20K, 40K, 80K, and 160K rows. The chart shows the number of “Logical reads” and “Log KB Flushed” generated per second.

The middle graphs show the average time per 1000 rows for each DML statement (“INSERT”, “DELETE”, “UPDATE”, and “SELECT”), as well as the total time spent on each DML statement per test run.

The bottom section shows details of the database being tested: its name, version, edition, and the test date. The last table contains the test statistics.

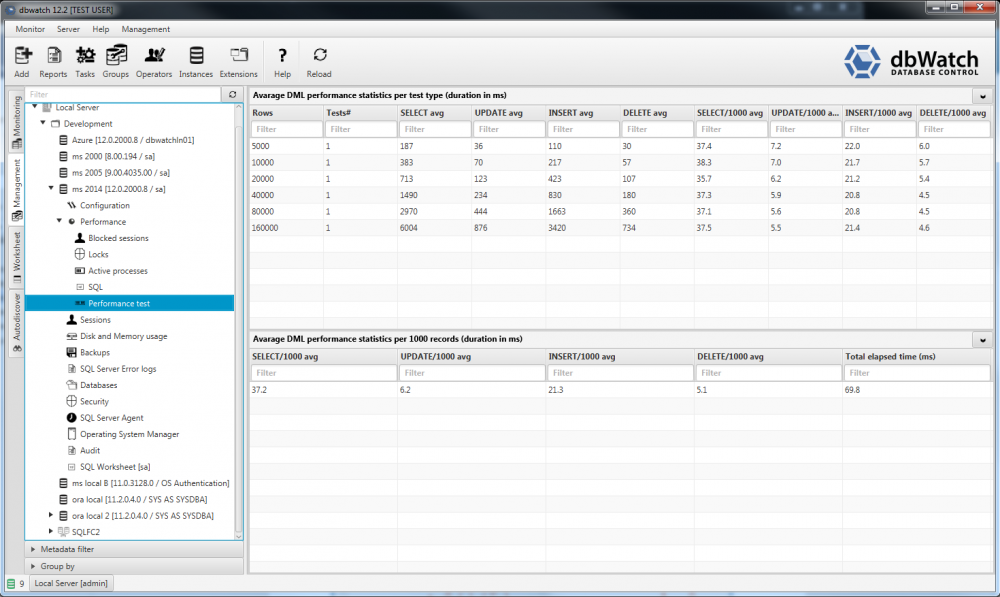

You can also view the statistics from the “Show statistics” menu.

The bottom table shows the average values per 1000 rows for each DML statement, and the last column shows the actual elapsed time in milliseconds.

The “Total elapsed time (ms)” value (69.9 ms in our example) shows how fast your system is; lower values mean better performance.
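The per-1000-row figures make runs of very different sizes comparable. dbWatch’s internal calculation isn’t shown here, so the Python sketch below is an assumed reconstruction of the arithmetic. It generates the doubling series of six runs from 5K to 160K rows and normalizes each run’s elapsed time to milliseconds per 1000 rows. The elapsed times are hypothetical.

```python
BASE_ROWS = 5_000
RUNS = 6  # 5K, 10K, 20K, 40K, 80K, 160K rows: each run doubles the previous one


def row_counts(base: int = BASE_ROWS, runs: int = RUNS) -> list[int]:
    """Row counts for a doubling test series."""
    return [base * 2**i for i in range(runs)]


def ms_per_1000_rows(elapsed_ms: float, rows: int) -> float:
    """Normalize a run's elapsed time so runs of different sizes can be compared."""
    return elapsed_ms / rows * 1000


if __name__ == "__main__":
    # Hypothetical elapsed times (ms) for one DML statement across the six runs.
    elapsed = [60.0, 118.0, 240.0, 470.0, 950.0, 1900.0]
    for rows, ms in zip(row_counts(), elapsed):
        print(f"{rows:>7} rows: {ms_per_1000_rows(ms, rows):.2f} ms per 1000 rows")
```

If the normalized value stays roughly flat as the row count doubles, the statement scales linearly; a rising value points to contention or I/O pressure at larger volumes.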

Benchmarking of Azure SQL

Microsoft Azure SQL Database offers three service tiers to choose from, each with several performance levels. Each performance level provides an increasing set of resources, or ‘power,’ designed to deliver increasingly higher throughput.

It’s critical to be able to measure how the increasing power of each performance level translates into better database performance. To help with this, Microsoft created the Azure SQL Database Benchmark (ASDB). The benchmark exercises a mix of basic operations found in most OLTP workloads and measures the throughput achieved by databases running at each performance level.

Database Transaction Units (DTUs) describe the resources and power of each service tier and performance level. A DTU is a blended measure of an performance level’s relative capacity, combining CPU, memory, and read and write rates. Doubling a database’s DTU rating is equivalent to doubling the resources available to it. By running real database operations while scaling database size, the number of users, and transaction rates in proportion to the resources provided to the database, the benchmark measures how the increasing power of each performance level affects database performance.
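If doubling the DTUs doubles the available resources, one way to read benchmark results is to ask how much of that increase shows up as throughput. The Python sketch below computes a scaling-efficiency ratio for that purpose; the function name and the measurements are hypothetical and not part of ASDB.

```python
def scaling_efficiency(dtu_low: int, tps_low: float, dtu_high: int, tps_high: float) -> float:
    """Ratio of throughput gain to DTU gain; 1.0 means throughput scaled linearly."""
    if min(dtu_low, tps_low) <= 0 or dtu_high <= dtu_low:
        raise ValueError("expected positive values and dtu_high > dtu_low")
    return (tps_high / tps_low) / (dtu_high / dtu_low)


if __name__ == "__main__":
    # Hypothetical measurements: going from 50 to 100 DTUs raised throughput
    # from 100 to 180 transactions per second.
    print(f"{scaling_efficiency(50, 100.0, 100, 180.0):.2f}")  # 0.90
```

A value well below 1.0 suggests the workload is limited by something the extra DTUs don’t address, such as contention in the application itself.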

Reporting the throughput of the Basic service tier in transactions per hour, the Standard service tier in transactions per minute, and the Premium service tier in transactions per second makes it easier to relate the performance capacity of each service tier to an application’s requirements.
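The unit conversion is simple arithmetic. The Python sketch below, with a hypothetical run of 1,800 transactions in 10 minutes, expresses the same measured rate in each tier’s reporting unit.

```python
# Unit in which each DTU-based service tier's throughput is reported,
# and how many seconds that unit spans.
TIER_UNITS = {
    "Basic": ("transactions per hour", 3600),
    "Standard": ("transactions per minute", 60),
    "Premium": ("transactions per second", 1),
}


def report_throughput(tier: str, transactions: int, elapsed_s: float) -> str:
    """Convert a transaction count over a run into the tier's reporting unit."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    unit, seconds_per_unit = TIER_UNITS[tier]
    rate = transactions / elapsed_s * seconds_per_unit
    return f"{rate:,.0f} {unit}"


if __name__ == "__main__":
    # Hypothetical run: 1,800 transactions completed in 10 minutes (600 s).
    for tier in TIER_UNITS:
        print(tier, "->", report_throughput(tier, 1_800, 600))
    # Basic -> 10,800 transactions per hour
    # Standard -> 180 transactions per minute
    # Premium -> 3 transactions per second
```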

ASDB exercises a mix of basic database operations that occur frequently in online transaction processing (OLTP) workloads. Although the benchmark was designed with cloud computing in mind, its database schema, data population, and transactions were designed to be broadly representative of the basic elements most commonly found in OLTP workloads.

Conclusion

In this blog, we have covered the core concepts you’ll need when you start working with SQL Server or Azure SQL benchmarks: what a benchmark is, the steps in the benchmarking process, open-source benchmarking tools, workload analysis, benchmarking SQL Server, and benchmarking Azure SQL.