Big Data is a complex domain of computer science and analytics. Of late, many top Silicon Valley companies have been concentrating heavily on Big Data Analytics and Cloud Computing. For a moment, let us set aside the intricacies of Cloud Computing and concentrate on the question “What Is Big Data Analytics?”

What Is Big Data Analytics

So, what does the term Big Data mean? The concept is complicated, but at its most basic, Big Data is a collection of data: huge amounts of largely unorganized information. The data is so large and complex that analyzing it with traditional methods is impractical. Analyzing it is therefore referred to as Big Data Analysis.

Big Data Analytics Wiki

In fact, many scholars and computer scientists strive for a simple, correct way to use the information already held in data sets. “There is always something inside, apart from what you see outside.”

In short, Big Data Analysis is a process in which the huge data sets collected are organized and analyzed for new insights. Analyzing the content of the data in order to use it for large-scale implementation can be regarded as Big Data Analysis. Scientific research produces tons of data, unfathomable amounts of it, which only a streamlined process or methodology can handle.

Challenges of Big Data

There are several challenges in maintaining Big Data, including analysis, searching, storage, visualization, transfer, and privacy.

The size of the data is neither constant nor consistent. It grows exponentially every day, hence the term Big Data. As of 2012, the size of data sets had grown from a few dozen terabytes to many petabytes. There is no limit to the data size. The only limitation today is whether we can manage the huge volume of additional data arriving every day.

Big Data cannot be managed by a single server. Managing the huge amounts of data generated daily requires several servers, each with the capacity to hold part of the information. This is one of the biggest challenges, and it falls under the heading of storage.
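One common way to spread records over several servers is to hash each record's key and let the hash pick the server. The sketch below is only a minimal illustration of that idea, not a description of any particular product. The server names and the `pick_server` and `distribute` helpers are hypothetical.

```python
import hashlib
from typing import Dict, List

# Hypothetical server names, for illustration only.
SERVERS = ["server-a", "server-b", "server-c"]


def pick_server(key: str, servers: List[str] = SERVERS) -> str:
    """Map a record key to one server using a stable hash."""
    # hashlib returns the same digest on every run, unlike the built-in hash().
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


def distribute(keys: List[str]) -> Dict[str, List[str]]:
    """Group record keys by the server that would store them."""
    placement: Dict[str, List[str]] = {name: [] for name in SERVERS}
    for key in keys:
        placement[pick_server(key)].append(key)
    return placement


if __name__ == "__main__":
    for server, keys in distribute([f"user-{i}" for i in range(10)]).items():
        print(server, keys)
```

With a plain modulo like this, adding a server changes where most keys land. Consistent hashing is the usual refinement when servers come and go.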

Misconceptions on Big Data

Since Big Data is hard to define completely, there are a few misconceptions about it. A single quote, which also happens to be my favorite, sums them up: “Big data is like teenage sex. No one has a clue about what it is, but everyone at least pretends to be doing it.”

As with any emerging technology, there are misconceptions about Big Data and Big Data analytics. Some of them are described below:

- Many people who analyse Big Data misunderstand the process. They combine large data sets from various internal and external sources and try to keep the analysis simple, rather than digging deeper with more sophisticated methods. An overly simple analysis gives no extraordinary results.

- Big Data isn’t the solution to every problem an organization has. It can certainly handle some of them, but Big Data Analytics (or analysis) cannot be treated as a standalone technology that brings in profits by itself.

- According to Steven J. Ramirez, Big Data is more closely associated with business than with technology. Treating it as an ordinary technology issue is another misconception.

Merits of Big Data analysis

Business intelligence is to business models roughly what artificial intelligence is elsewhere: a program that runs permutations and combinations over clusters of acquired data. Larger companies find this useful for targeting their marketing strategies and for predicting changes in consumer behavior.

Sources that can be categorized as data include Internet survey forms, click-bait, and email advertising. Big Data is considered the preferred tool because of its increased flexibility.

World trade and economic fluctuations can be monitored using big data analysis. Political organizations also use big data analysis to understand voters’ mind-set.

The biomedical field, the branch of science that has set humanity on a continuing journey of increased life expectancy, has the greatest need for Big Data analysis, since hundreds of calculations have to be performed.

If channelled properly, there is scope for many more technological advancements.

These are the basic things you need to know about Big Data, Big Data Analytics, and a few misconceptions about Big Data.

ZENROWS

ZenRows is a data extraction tool designed to easily collect content from any website with a simple call. It handles rotating proxies, headless browsers and CAPTCHAs. ZenRows bypasses any anti-bot or blocking system to help you obtain the information you are looking for. For that, it includes several options such as JavaScript Rendering and Premium Proxies. There is also an autoparse option for the most popular websites that returns structured data automatically, converting unstructured content into structured data (JSON output) with no code necessary.

- Easy to use interface to get the HTML from any website with a simple API call

- Rotating Proxies that ensure you will always be in stealth mode

- Antibot bypassing to bypass all major bot protection countermeasures

- A Fine-tuned Autoparse algorithm that automatically extracts data for you

- An easy-to-integrate API that works with any programming language

HITACHIVANTARA

Hitachi Vantara brings a cost-effective path for your digital transformation with its Internet of Things (IoT), cloud, application, big data and analytics solutions.

Integration Simplified:

- Drag-and-drop interface to create data pipelines

- Broad connectivity to virtually any data source

- Blend data wherever it is, on-premises or in cloud

- Flat files, RDBMS, big data, object stores, APIs

- Salesforce and Google Analytics

- Bulk load support for popular cloud data warehouses

- Operationalize R, Python, Scala and Weka models

- Scikit-learn, Spark MLlib, TensorFlow and Keras

- Scalability, containerization and security

Pentaho Business Analytics:

- In-memory data caching for interactive analysis

- Connectivity to on-premises or cloud sources

- Flat files, databases, big data, object stores

- Analyze outliers with filtering and zooming

- Open-source heritage, no vendor lock-in

- Rich library of interactive visualizations

- Flip between Apache Spark or MapReduce

- Multitenant, embeddable and secure reporting

- Mobile web experience with gesture support

KNIME

At KNIME, we build software to create and productionize data science using one easy and intuitive environment, enabling every stakeholder in the data science process to focus on what they do best.

- Build data science workflows

- Blend data from any source

- Shape your data

- Leverage Machine Learning & AI

- Discover and share insights

- Scale execution with demands

QUBOLE

Qubole’s cloud data platform helps you fully leverage information stored in your cloud data lake. Qubole intelligently automates and scales big data workloads in the cloud for greater flexibility.

- Single platform for end-to-end big data processing

- Support 10x more users and data without adding new administrators

- Lower your cloud costs by 50% or more

- Commitment to Open Source

- Security and Trust

STATWING

Efficient and Delightful Statistical Analysis Software for Surveys, Business Intelligence Data, and More.

- Simply upload your spreadsheet or dataset, then select the relationships you want to explore

- Statwing is designed solely for analyzing tables of data, so users finish days of analysis in minutes

- Statwing is built for analysts, so it chooses statistical tests automatically

- Statwing automatically visualizes every analysis, and enables easy export for PowerPoint

- And More.

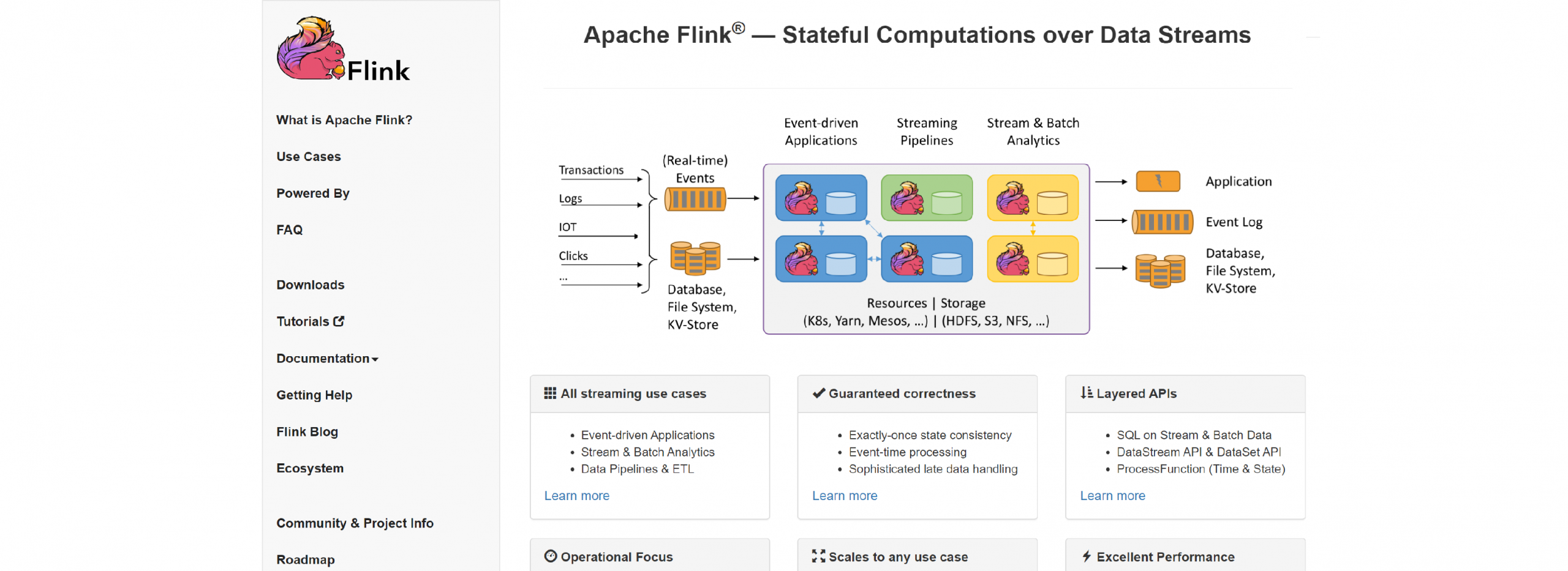

FLINK.APACHE

Apache Flink is a framework and distributed processing engine for stateful computations over unbounded and bounded data streams.

- Deploy Applications Anywhere

- Run Applications at any Scale

- Leverage In-Memory Performance
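To make "stateful computation over a stream" a little more concrete, here is a small plain-Python sketch. It does not use Flink or its API. It only illustrates the idea of keeping per-key state while events arrive one at a time. The event values and the `running_counts` function are assumptions made for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def running_counts(events: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Yield (key, count so far) for each event as it arrives."""
    state: Dict[str, int] = defaultdict(int)  # per-key state kept between events
    for key in events:
        state[key] += 1
        yield key, state[key]


if __name__ == "__main__":
    # A bounded stream is a finite iterable; an unbounded one could be
    # a generator that never ends. The function handles both the same way.
    for key, count in running_counts(["click", "view", "click"]):
        print(key, count)
```

Because the function is a generator, it emits a result per event instead of waiting for the input to finish, which is what lets the same logic run over data that never ends.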

OPENREFINE

OpenRefine (previously Google Refine) is a powerful tool for working with messy data: cleaning it, transforming it from one format into another, and extending it with web services and external data.

- Explore Data

- Clean and Transform Data

- Reconcile and Match Data
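As a rough idea of what cleaning and matching messy data can involve, the sketch below normalises strings so that trivially different spellings of the same name end up in one group. It is a simplified plain-Python illustration, not OpenRefine's implementation, and the `fingerprint` and `cluster` helpers and the sample company names are invented for the example.

```python
import string
from collections import defaultdict
from typing import Dict, Iterable, List


def fingerprint(value: str) -> str:
    """Normalise a string so that trivially different spellings collide."""
    cleaned = value.strip().lower()
    # Drop ASCII punctuation such as commas and full stops.
    cleaned = cleaned.translate(str.maketrans("", "", string.punctuation))
    # Sort the unique words so that word order does not matter.
    tokens = sorted(set(cleaned.split()))
    return " ".join(tokens)


def cluster(values: Iterable[str]) -> Dict[str, List[str]]:
    """Group values that share the same fingerprint."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for value in values:
        groups[fingerprint(value)].append(value)
    return dict(groups)


if __name__ == "__main__":
    print(cluster(["Acme, Inc.", "acme inc", "Inc Acme", "Globex"]))
```

Groups with more than one member are candidates for merging. A person should still review them, since this kind of normalisation can also collide names that are genuinely different.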

RAPIDMINER

RapidMiner is a data science platform for teams that unites data prep, machine learning, and predictive model deployment.

Analysis of Big Data | Big Data Analysis

Big data analytics is the process of analysing large volumes of information in detail to understand a market and how a field works. For example, all sorts of information about a field of business are analysed to understand market patterns, customer needs and anything else that can be helpful for the business. Such a detailed analysis of big data is called big data analytics.

This kind of thorough analysis is necessary to know your business better, and it helps in decision making. There are four types of analytics, of which big data analytics is one. Let’s first go through the other three, simpler forms of analytics.

Business Intelligence (BI)

Business intelligence provides information such as standard business reports, ad hoc reports, OLAP and notifications based on the analytics. It is the most basic form of analytics, where only reports from the recent past, such as annual reports, are analysed. Two distinct types of tools are usually used for business intelligence: spreadsheets, and reporting and querying tools. The data is first arranged in rows and columns with a spreadsheet application. It is then analysed, sorted and extracted with reporting and querying tools. Various organizations sell such tools online, and a search engine will turn them up.
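As a rough illustration of the "rows and columns first, reporting and querying second" workflow, the sketch below loads a few made-up sales rows into an in-memory SQLite table and runs a summary query. The table name, the columns and the figures are all invented for the example, and SQLite simply stands in for whatever reporting tool a business actually uses.

```python
import sqlite3

# Made-up rows: (region, year, revenue)
ROWS = [
    ("north", 2022, 120.0),
    ("north", 2023, 150.0),
    ("south", 2022, 90.0),
    ("south", 2023, 110.0),
]


def revenue_by_region(rows):
    """Return total revenue per region, highest first."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, year INTEGER, revenue REAL)")
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
        cursor = conn.execute(
            "SELECT region, SUM(revenue) AS total "
            "FROM sales GROUP BY region ORDER BY total DESC"
        )
        return cursor.fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for region, total in revenue_by_region(ROWS):
        print(region, total)
```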

Big Data Business Intelligence

This is when large sets of data are used to provide reports similar to those of basic business intelligence (BI). It is one step beyond business intelligence analytics. It provides deeper reports than business intelligence because the reports draw on both older and current data of the business. It looks at buyers, their professional and personal profiles, and their customer service history. Such detailed study is big data business intelligence.

Big Analytics

This process is carried out to map the future of your business. Big analytics is when processes like text mining, predictive modelling, forecasting and optimization are conducted to obtain forward-looking, proactive reports for effective decision making. These analytics can spot weaknesses, future issues and current trends, on the basis of which effective decisions for the advancement of the company can be taken. Questions such as how business will be next year, which subscriptions may lapse, what the chances of new buyers are, and how customers will behave in future are answered through this process.
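Forecasting can be as simple as fitting a straight line to past figures and extending it one step. The sketch below does that with ordinary least squares. The monthly figures are invented and the `fit_line` and `forecast_next` helpers are hypothetical; real forecasting would normally use richer models and account for seasonality and uncertainty.

```python
from typing import Sequence, Tuple


def fit_line(ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares line through the points (0, ys[0]), (1, ys[1]), ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two observations")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast_next(ys: Sequence[float]) -> float:
    """Extend the fitted line one step past the last observation."""
    slope, intercept = fit_line(ys)
    return intercept + slope * len(ys)


if __name__ == "__main__":
    monthly_sales = [10, 12, 14, 16]  # invented figures
    print(forecast_next(monthly_sales))
```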

Big Data Analytics

With big data analytics, only the relevant data is extracted from the large data sets and then studied further to reveal hidden patterns and market changes. This in turn enables companies to foresee changes in the markets and make decisions accordingly. These analytics are rapidly gaining popularity among modern businesses because of their effective results. Let us look further at big data analytics and its benefits.

The Process

Various organizations conduct big data analytics on behalf of companies, so that a company can make decisions on the basis of the information the analysis reveals.

Various sources are considered for analysis, as listed below:

- Web Server Logs

- Social Media Content

- Social Network Activity

- Phone calls and details

- Email records

- Customer responses

These are some of the many sources analysed during big data analytics. Some companies may also conduct deeper analysis that includes sources like bank and transaction records.
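To give a feel for what analysing one of these sources looks like, the sketch below parses a few web server log lines and counts requests per status code and per path. It assumes the logs are in the Common Log Format that many web servers can write. The sample lines and the `summarize` helper are invented for the example.

```python
import re
from collections import Counter

# Pattern for the Common Log Format (assumed here; real logs may differ).
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+)'
)

# Invented sample lines, including one that is not a valid log entry.
SAMPLE = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '203.0.113.9 - - [10/Oct/2023:13:56:01 +0000] "GET /pricing HTTP/1.1" 200 1045',
    '203.0.113.5 - - [10/Oct/2023:13:56:40 +0000] "GET /missing HTTP/1.1" 404 512',
    'not a log line',
]


def summarize(lines):
    """Count requests per status code and per path, skipping bad lines."""
    statuses, paths = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # ignore lines that do not look like log entries
        statuses[match["status"]] += 1
        paths[match["path"]] += 1
    return statuses, paths


if __name__ == "__main__":
    statuses, paths = summarize(SAMPLE)
    print(statuses.most_common())
    print(paths.most_common(2))
```

At real scale the same counting would be spread across many machines, but the per-line logic stays much the same.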

The Force behind Big Data Analytics

Skilled experts are very important for good analysis. Individuals like data scientists and predictive analytics experts are required to deliver quality big data analytics. A big data analytics organization can decline rapidly if it fails to meet the quality requirements of the companies that need the analysis.

Importance of Big Data Analytics

Because of immense technological development and online business facilities, it is now very important for every business to do big data analytics in order to make the right decisions for the future of the company. For example, with many people using social network websites, analysing the comments about your business on those websites can give an insight into what customers feel about your company. Such insights from various angles provide a map of the future for your company, which you can then use to make decisions. Big data analytics is hence very important for business success today.

10 Big Trends in Big Data Analytics

If you’re looking for a one-word description of the year’s Big Data Analytics trends, it would be: more. In today’s superfast market, technologies develop in a few months or even a few weeks. Business organizations need to keep up with the ever-advancing pace or be left behind. To help you keep up with the top trends in big data analytics, we’ve provided a list of them.

- Clouds: Previously, most data analysis was done on physical storage systems. With the advancement of technology, most Big Data Analysis today uses cloud computing. It is often faster and costs much less than physical systems. It helps in collecting real-time data, analysing it and selecting target customers based on factors such as demographics, location and behaviour, so that marketers get the best response from their customers. The main advantages of cloud computing for big data analytics are that it is safe and accessible at any time.

- Hadoop: Hadoop is turning into a general-purpose data operating system as distributed analysis frameworks evolve into distributed resource managers. This means that the popularity of Hadoop is likely to increase, since graph analysis, in-memory processing and stream processing can now be done on Hadoop and perform well there.

- Big Data Hub: Previously, people prepared certain data headings and classified the data at hand under those headings. The new trends in big data analytics don’t work that way. Nowadays data is stored in big data hubs or data lakes available in the cloud, which allow a broader classification of data that is more accurate given the data already in the hub.

- Prognostic Analytics: Although the amount of data for big data analytics increases each day, computers are becoming more and more powerful at handling it. This helps analysts come up with newer techniques of data analysis that adapt to behaviour each day, leading to more and more accurate analysis. This advancement has helped in solving problems more easily and with new solutions.

- SQL on Hadoop: With SQL tools on Hadoop, coders can now use the system to analyze almost any data available. The language makes the system well suited to “iterative analytics”, where questions and answers follow one after the other.

- NoSQL: NoSQL, or Not only SQL, refers to high-performance alternatives to relational (SQL) databases, with which the relationship between business and customers can be analyzed in a faster and more direct way. It is gaining popularity because many NoSQL systems are lightweight and open-source and come with specially customized cloud offerings.

- Deep Learning: Deep Learning is a fast-emerging family of machine learning techniques based on neural networks. It enables computers to apply advanced analytical techniques to data. This helps computers pick out the data of interest to the client from a pool of uncategorized data. The machine doesn’t need rules programmed in advance for each kind of data, and it can recognize a huge range of data.

- In-Memory Analytics: This is one of the most secure and fastest ways of analyzing big data. HTAP, or hybrid transaction/analytical processing, is a part of in-memory analytics that is widely used by business organizations today. Although it may be one of the fastest techniques, it has certain shortcomings: all the data needs to be in the same database to be analysed by HTAP. Data today is spread across a wide range of databases, and bringing it together into one may be problematic. Another problem with in-memory analytics is that adopting it adds one more system to be taken care of.

- Fluid Analysis: Fluid Analysis allows big data analytics systems to adapt to ever-changing situations by leaving room to integrate new developments as they occur, keeping the system up to date at all times.

- Multi-polar Analysis: This is probably one of the biggest trends in big data analytics in the current market. It allows layered analysis of data so that the various steps are clearly marked and available for future use.