Every enterprise today runs into a problem of handling large chunks of data. It is very difficult to keep a check on every bit of information that every enterprise record in the course of its lifetime. Where there is necessity, there is invention and similar is the case with Hadoop. Primarily built as different software to help search engines handle the large amounts of information and provide quicker search results, Hadoop was developed with a simple idea of using automated systems to handle the data and process it for tasks like search and analyze.

This proves to be a very efficient method for handling the big data, as the data is handled and processed according to your requirement by using an automated system that provides 100% accuracy. Finally the work load of handling millions of bits of important information could be done efficiently without any error or loss of the data. With the introduction of automation it becomes very easy to track the data, create backups and analyze the data. Big data can be irregular and random, so it is best handled by an automated system than humans.

The Start of Hadoop

Initially Hadoop was a part of a project called Nutch, which was developed by Google to cluster millions of pieces of information so that the complete searching process could be done in an automated system. Earlier the same process was carried out by humans, but then the internet industry started rocketing high. The data increased from hundreds to millions and handling such massive amounts of data started becoming impossible for humans. The Nutch project aimed at clustering all the information into smaller unit for quicker search results.

Whenever a keyword is entered, the software would search in all the clusters for the matching words and send out the results in a matter of seconds. This massively decreased the errors made by humans due to large amounts of information. The Nutch project was later divided in two separate frameworks out of which one that was handled and storing large chunks of data, was named Hadoop.

How Hadoop Works?

Earlier, paper copies of the data were saved in enterprises, but as the information increases the data gets complicated and storing them safely is another hassle. The paper gets damaged in the course of time and not only that, but also organizing them accordingly so that every bit of information is accessible is a task next to impossible. Loss of data can result in permanent loss of the information and this also proves to be risky for an enterprise. Hadoop found a simple yet effective solution for the problem of handling large chunks of important data. Hadoop works as an open source software framework for handling and analyzing the big data. The software aims at data which is complex and irregular in a way that it cannot be classified or organized in tables easily. It easily stores data and avails easy and fast processing for its users.

Hadoop for everyone

Hadoop distributes large chunks of data into smaller clusters and assigns a name to each cluster so that the system can quickly process the data and present the information as commanded by the user. From analytics to intelligent search results, Hadoop can handle it all. In the online interface the data products are stored in Hadoop clusters and when a keyword is entered, the system analyzes every cluster to find out results that match the keywords. As the data is stored in smaller clusters, it becomes easy to search and analyze the information in clusters, as a result of this Hadoop can easily process the data and provide results faster. The Hadoop framework is written in Java, however, the user can use a programming language with Hadoop streaming.

Hadoop proves to be a perfect tool in the world of big data. It has numerous benefits like a vast storage support to store terabytes of data. One can use Hadoop to gather data from different sources like social media, emails, clickstream, etc. It also supports many different types of analytics to process the data. Another major advantage is that Hadoop replicates a data stored in a node, with all the other nodes. As a result of this, there is always a backup of the files in case of data failure or loss. Hadoop provides all these benefits along with a remarkable super fast experience. Hadoop is a very essential tool that is fast, flexible, scalable, cost efficient and easy to use. This makes Hadoop a must for every enterprise if they wish to deal the big data effectively and efficiently. Perfect big data analysis can reveal many secrets hidden in the chunks of data that can be used for the benefit of the business; and with Hadoop in hand you surely don’t have to worry about perfect big data analytics.

QLIK

Discover the only end-to-end data integration and analytics platform built to transform your entire business.

- Enterprise-grade security and governance

- Flexible and scalable architecture

- Deploy in any environment

- Open APIs / API Library

- Open and extensible platform

- Embedded analytics

CLOUDERA

Cloudera delivers an Enterprise Data Cloud for any data, anywhere, from the Edge to AI.

- Cloudera Manager — making Hadoop easy

- Multi-tenant management and visibility

- Extensible integration

- Trusted for production

- Built-in proactive and predictive support

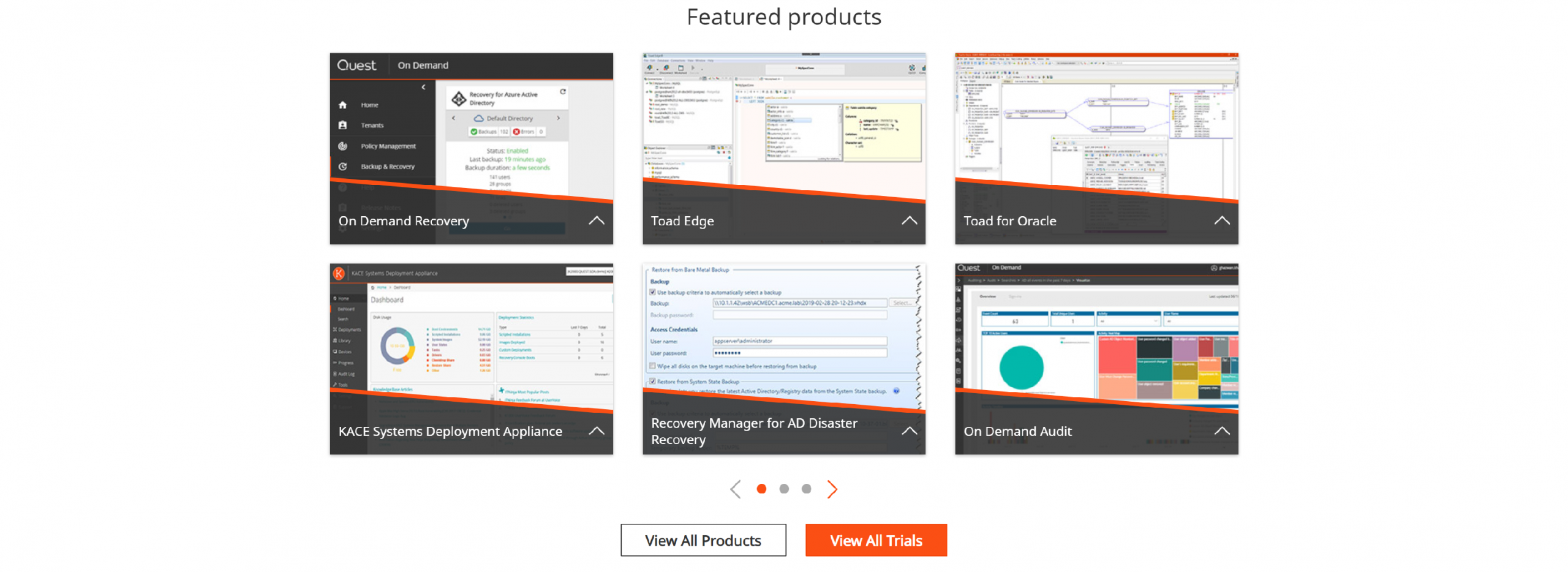

QUEST

Simplify IT management and spend less time on IT administration and more time on IT innovation.

- Capabilities

- Data access and querying

- Self-service data preparation

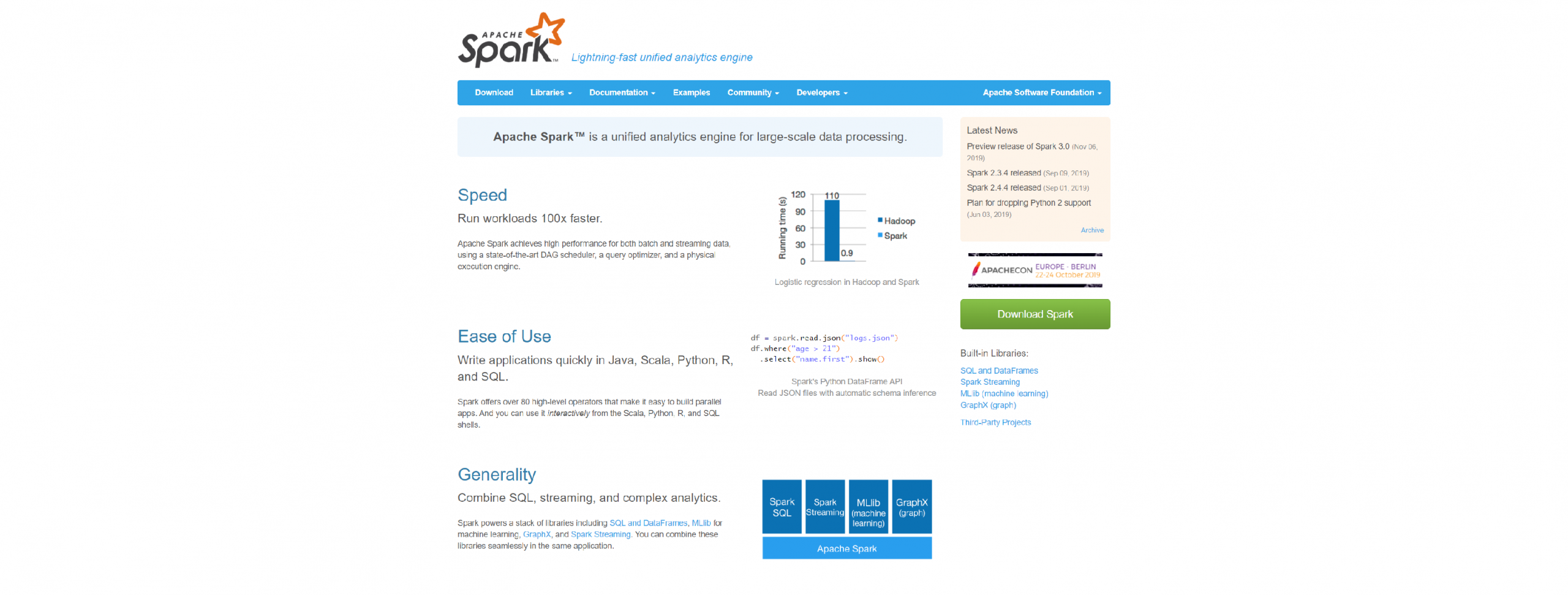

SPARK

Apache Spark is a unified analytics engine for big data processing, with built-in modules for streaming, SQL, machine learning and graph processing.

- Run workloads 100x faster

- Write applications quickly in Java, Scala, Python, R, and SQL

- Combine SQL, streaming, and complex analytics

- Spark runs on Hadoop, Apache Mesos, Kubernetes, standalone, or in the cloud. It can access diverse data sources

IMPALA

Apache Impala is a modern, open source, distributed SQL query engine for Apache Hadoop.

- Do BI-style Queries on Hadoop

- Count on Enterprise-class Security

- Unify Your Infrastructure

- Retain Freedom from Lock-in

- Implement Quickly

- Expand the Hadoop User-verse

MAHOUT

Apache Mahout (TM) is a distributed linear algebra framework and mathematically expressive Scala DSL designed to let mathematicians, statisticians, and data scientists quickly implement their own algorithms.

- Application Code

- Samsara Scala-DSL (Syntactic Sugar)

- Logical / Physical DAG

- Engine Bindings and Engine Level Ops

- Native Solvers