Business intelligence is getting a lot of attention again. Early in January, Gartner published five business intelligence predictions for 2017. One of the major ones was that “by 2019, business units will control at least 40 per cent of the total budget for BI”. That is close to half of all BI spending. More companies are now implementing a business intelligence solution, or have a strategic initiative to implement business intelligence analytics in the near future. Alongside BI come related ideas: master data management (MDM), service-oriented architecture (SOA), content management and enterprise information management. Combined, they produce a consolidated view of business performance and business information: a single source for customers, their daily orders, payments, behavior, reports, documents and the financial information needed for business performance reporting.

Making these reports available to end users through an internet or web-enabled architecture gives them easy access to the information and reduces costs, because no reporting client has to be installed on every desktop or laptop. The value of an enterprise business intelligence framework is that it makes it easier to answer business performance questions, both to analyze how well the business is doing and to predict how it will do. Business and executive leaders need this visibility to forecast and to steer the company in the right direction.

A tremendous amount of groundwork needs to be completed for a major BI initiative to succeed. How do you go about implementing a business intelligence framework? Where do we start? How do we start? These are some of the key questions to answer before attempting to implement an enterprise business intelligence framework.

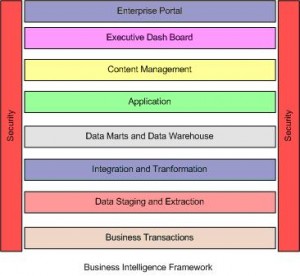

Before attempting to answer those questions, we should understand what makes up a business intelligence framework. The basic structure of a business intelligence framework has not changed much since its inception years ago. The process still runs from identifying and extracting data, through profiling and cleaning, to matching, conforming and loading it into a data warehouse, and perhaps further into a data mart for reporting. What has changed is the methods and tools available in the market, the hours it takes to build the warehouse data, and the time we spend drilling down into details. The accompanying figure shows a basic BI framework.

The core of the framework is the data mart and data warehouse. To get there, however, the foundation is the capture of business transaction data. This layer may itself be spread across multiple sources, infrastructures, locations and operating systems. Identifying these sources and verifying that their data is actually needed is the first step towards enabling a business intelligence framework. Once the sources are identified, the next step is to integrate them and find a method to transform and move this diverse data into a single source.
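The first step, identifying sources and verifying that their data is needed, can be sketched as a simple inventory filter. This is only an illustration: the `SourceSystem` fields, the system names and the subject areas are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceSystem:
    """One entry in a (hypothetical) inventory of transaction systems."""
    name: str
    platform: str              # e.g. "mainframe", "unix", "windows"
    location: str
    subject_areas: frozenset   # kinds of data the system holds


def select_sources(inventory, required_areas):
    """Keep only the sources holding at least one required subject area."""
    required = frozenset(required_areas)
    return [s for s in inventory if s.subject_areas & required]


# Hypothetical inventory spread over platforms and locations.
inventory = [
    SourceSystem("order_entry", "unix", "Chicago", frozenset({"orders", "customers"})),
    SourceSystem("ledger", "mainframe", "Dallas", frozenset({"payments", "finance"})),
    SourceSystem("badge_log", "windows", "Chicago", frozenset({"building_access"})),
]

selected = select_sources(inventory, ["orders", "payments", "customers"])
print([s.name for s in selected])
```

In this sketch the badge log is dropped because nothing in the reporting requirements needs it, which is the kind of decision the verification step is meant to force.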

This is done in the next layer, staging and extraction. The data is bulk loaded into a staging area, where an extraction tool profiles, scrubs, transforms, validates and cleans it according to business rules and loads it into a normalized data store, sometimes called an Operational Data Store (ODS). At this stage the business may have a limited opportunity to slice and dice the operational data, depending on the requirement, until the data is finally loaded into a dimensionally modeled data warehouse. This is the end state, where all the business data is consolidated and stored.

An application layer sits on top of the data warehouse layer to give access to the data for analysis. These applications can be simple reporting applications that produce reports based on queries, or complex ECM (Enterprise Content Management) software that offers powerful analytical capabilities. The content management layer is closely integrated with the application layer; sometimes the two are the same, or run on the same product or come from the same vendor. What the content layer adds is deep analytical drill-down, along with document management, file sharing and role-based access.

Many of the tools available today use a cube-based method, in which intermediate repositories are created based on the reporting needs. These cubes of recent data can be cached on a web server for performance and made available to end users through web clients. A simple Java applet is sometimes installed on the client side to establish the connection between client and server.
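To make the staging flow more concrete, here is a minimal sketch of scrubbing and validating staged rows against business rules and then rolling the surviving rows up into a small cube. The rows, the field names and the rules themselves (non-empty order id, parseable date, non-negative amount) are assumptions made for illustration; a real extraction tool would apply whatever rules the business defines.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical staged rows, as they might arrive from a bulk load.
staged_rows = [
    {"order_id": "1001", "region": " east ", "order_date": "2017-01-15", "amount": "250.00"},
    {"order_id": "1002", "region": "West", "order_date": "2017-01-20", "amount": "-40.00"},
    {"order_id": "1003", "region": "EAST", "order_date": "2017-02-03", "amount": "99.50"},
    {"order_id": "", "region": "West", "order_date": "2017-02-10", "amount": "10.00"},
]


def clean(row):
    """Scrub and validate one staged row; return None if it breaks a rule."""
    if not row["order_id"]:
        return None                      # assumed rule: order id is mandatory
    try:
        order_id = int(row["order_id"])
        amount = float(row["amount"])
        order_date = datetime.strptime(row["order_date"], "%Y-%m-%d")
    except ValueError:
        return None                      # unparseable values are rejected
    if amount < 0:
        return None                      # assumed rule: no negative amounts
    return {
        "order_id": order_id,
        "region": row["region"].strip().title(),   # conform region names
        "month": order_date.strftime("%Y-%m"),
        "amount": amount,
    }


def build_cube(rows):
    """Aggregate cleaned rows into totals keyed by (region, month)."""
    totals = defaultdict(float)
    for row in rows:
        totals[(row["region"], row["month"])] += row["amount"]
    return dict(totals)


ods_rows = [r for r in map(clean, staged_rows) if r is not None]
cube = build_cube(ods_rows)
print(cube)
```

The `(region, month)` totals stand in for the intermediate repository a cube-based tool would build; a reporting client could then read them without touching the detailed rows.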

Yet another layer that can make use of the application layer is the executive dashboard. This layer provides role-based access to the entire data set in a simplified dashboard. Dashboards are designed around the key performance indicators (KPIs) and business metrics collected in the planning stage. The application, content and dashboard layers can then be put together in an enterprise portal that provides access to both internal and external clients. Sometimes the portal is combined with a single sign-on solution so that access relies on a common authentication. Finally, to make the framework robust, tight security needs to be defined. This may include SOX compliance, data governance, HIPAA and many other policies that need to be evaluated and implemented depending on the business. Some of the tools available are IBM Tivoli Access Manager, Netegrity SiteMinder, etc.
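Role-based access to KPIs can be illustrated with a very small sketch. The role names, KPI names and values below are hypothetical, and a real portal would take the role from its authentication or single sign-on layer rather than from a function argument.

```python
# Hypothetical KPI values, as a reporting layer might publish them.
KPI_VALUES = {
    "monthly_revenue": 349.5,
    "open_orders": 12,
    "days_sales_outstanding": 41,
}

# Hypothetical mapping of roles to the KPIs each role may see.
ROLE_KPIS = {
    "cfo": ["monthly_revenue", "days_sales_outstanding"],
    "sales_manager": ["monthly_revenue", "open_orders"],
}


def dashboard_for(role):
    """Return only the KPIs the given role is allowed to see."""
    allowed = ROLE_KPIS.get(role, [])    # unknown roles see nothing
    return {name: KPI_VALUES[name] for name in allowed}


print(dashboard_for("cfo"))
print(dashboard_for("sales_manager"))
```

Defaulting unknown roles to an empty dashboard is a deliberate choice in this sketch: access is granted only where a mapping exists.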

Building such a business intelligence framework does not by itself solve the problem of information management. Companies that have created multi-terabyte data warehouses sometimes still do not have a common customer source. Customer data can be spread across multiple domains: most of it may reside in a real-time order entry system, some in an ERP environment, and some in external systems. Getting this data into the warehouse takes time, and sometimes several cycles. So how do we consolidate a single source of customer data that the business can verify and report on in real time?

Thanks to SOA, we now have the ability to connect these diverse data points. Using MOM (message-oriented middleware), we can capture this data and feed it into an EAI (Enterprise Application Integration) environment that connects to the end framework layer in real time. A combination of tools such as IBM MQSeries, NetWeave, Modulus, PeerLogic, JCAPS (SeeBeyond), Vignette, BEA, webMethods, TIBCO and Sonic can be used to integrate a real-time environment with the business intelligence framework. Scheduler software such as IBM Tivoli, Embarcadero, Cybermation, BMC, AppWorx, etc. may be used to monitor and connect all these business processes and to avoid manual errors.
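The idea of feeding customer data from several systems through messaging into one consolidated view can be sketched as follows. Python's in-process `queue.Queue` is used here purely as a stand-in for a real message-oriented middleware product, and the source names, customer ids and fields are hypothetical.

```python
import queue


def publish(bus, source, customer_id, fields):
    """Put one customer update on the bus, tagged with its source system."""
    bus.put({"source": source, "customer_id": customer_id, "fields": fields})


def consume(bus, customer_view):
    """Drain the bus, merging each message into one record per customer."""
    while True:
        try:
            msg = bus.get_nowait()
        except queue.Empty:
            break
        record = customer_view.setdefault(msg["customer_id"], {})
        record.update(msg["fields"])     # later messages overwrite earlier fields
    return customer_view


# Stand-in for the middleware; a real MOM would be a networked broker.
bus = queue.Queue()
publish(bus, "order_entry", "C-17", {"name": "Acme Corp", "last_order": "2017-02-03"})
publish(bus, "erp", "C-17", {"credit_limit": 50000})
publish(bus, "erp", "C-42", {"name": "Globex", "credit_limit": 10000})

customer_view = consume(bus, {})
print(customer_view["C-17"])
```

The last-writer-wins merge is the simplest possible conflict rule; a master data management process would normally decide which system is authoritative for each field.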

Apart from this, there should be a solid strategy for managing data movement. It should cover everything from getting data out of the source to loading it into staging, the ODS, the warehouse and the archive. A common problem in this area is the diversity of sources and of the tools available to integrate with them, especially when one of the sources, as is true in most cases, is a legacy mainframe system. We end up with ETL tools that integrate easily with some sources, and with a flat file as the only option for the legacy ones. To simplify the process, follow the keep-it-simple rule: where an ETL tool cannot pull data directly from a source, use flat files for the initial source-to-stage transfer, and keep to that same flat-file approach across all such sources rather than adding a different mechanism for each. In every other case, the ETL tool should reach out directly to the source and bring the data to staging, or sometimes straight to the ODS. Additional security policies should be considered for these flat-file transfers.
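The rule above, direct extraction where the ETL tool can connect and a flat file otherwise, can be sketched in a few lines. The pipe-delimited layout, the column names and the `etl_can_connect` flag are assumptions for illustration; an in-memory string stands in for the file a legacy system would actually deliver.

```python
import csv
import io


def extraction_path(etl_can_connect):
    """Choose how a source reaches staging under the keep-it-simple rule."""
    return "direct_etl" if etl_can_connect else "flat_file"


def load_flat_file(handle, delimiter="|"):
    """Read a delimited flat file with a header row into staging rows."""
    reader = csv.DictReader(handle, delimiter=delimiter)
    return [dict(row) for row in reader]


# Hypothetical extract from a legacy system the ETL tool cannot reach.
legacy_extract = io.StringIO(
    "cust_id|name|balance\n"
    "C-17|Acme Corp|1200.50\n"
    "C-42|Globex|0.00\n"
)

print(extraction_path(etl_can_connect=False))
staging_rows = load_flat_file(legacy_extract)
print(staging_rows)
```

Values are left as strings on purpose: type conversion and validation belong to the staging rules, not to the transfer step.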